Introduction

This part has been moved to https://wasmedge.org/docs/develop/overview. Please use our new docs.

Quick Start

This part has been moved to https://wasmedge.org/docs/category/getting-started-with-wasmedge. Please use our new docs.

WasmEdge Installation And Uninstallation

This part has been moved to https://wasmedge.org/docs/start/install. Please use our new docs.

Using WasmEdge in Docker

This part has been moved to https://wasmedge.org/docs/develop/deploy/kubernetes/docker-slim. Please use our new docs.

Running WASM with WasmEdge CLI

This part has been moved to https://wasmedge.org/docs/start/build-and-run/cli. Please use our new docs.

Execution in AOT Mode

This part has been moved to https://wasmedge.org/docs/start/build-and-run/aot. Please use our new docs.

WasmEdge Features

This part has been moved to https://wasmedge.org/docs/start/wasmedge/features. Please use our new docs.

Supported WASM And WASI Proposals

This part has been moved to https://wasmedge.org/docs/start/wasmedge/extensions/proposals. Please use our new docs.

WasmEdge Proprietary Extensions

This part has been moved to https://wasmedge.org/docs/develop/wasmedge/extensions/unique_extensions. Please use our new docs.

WasmEdge Integrations

This part has been moved to https://wasmedge.org/docs/start/wasmedge/integrations. Please use our new docs.

Supported Platforms

This part has been moved to https://wasmedge.org/docs/start/wasmedge/features#cross-platform. Please use our new docs.

Comparison

// TODO: TBD in New Docs - @adithyaakrishna

WasmEdge Use Cases

This part has been moved to https://wasmedge.org/docs/start/usage/use-cases. Please use our new docs.

Server Side Rendering Modern Web UI

Traditional web applications follow the client-server model. In the past era of application servers, the entire UI was dynamically generated on the server, and the browser was simply a thin client that displayed the rendered web pages in real time. However, as browsers have become more capable and sophisticated, the client can now take on more workload to improve application UX, performance, and security.

That gave rise to the era of Jamstack. There is now a clear separation between frontend and backend services. The frontend is a static web site (HTML + JavaScript + WebAssembly) generated from UI frameworks such as React.js, Vue.js, Yew or Percy, and the backend consists of microservices. Yet, as Jamstack gains popularity, the diversity of clients (both browsers and apps) makes it very difficult to achieve great performance across all use cases.

The solution is server-side rendering (SSR): edge servers run the "client side" UI code (i.e., the React-generated JavaScript or the Percy-generated WebAssembly) and send the rendered HTML DOM objects back to the browser. In this case, the edge server must execute exactly the same code (i.e., JavaScript and WebAssembly) as the browser to render the UI. Applications built this way are called isomorphic Jamstack applications. The WasmEdge runtime provides a lightweight, high-performance, OCI-compliant, and polyglot container to run all kinds of SSR functions on edge servers.

- React JS SSR function

- Vue JS SSR function (coming soon)

- Yew Rust compiled to WebAssembly SSR function (coming soon)

- Percy Rust compiled to WebAssembly SSR function
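The core of the isomorphic approach is a render function that depends only on its input state, so the browser and the edge server produce identical HTML from identical data. The sketch below illustrates that idea in plain Rust; the `TodoList` type and `render` function are hypothetical and are not part of Percy, Yew, or any WasmEdge API.

```rust
/// Hypothetical UI state shared by the browser and the edge server.
pub struct TodoList {
    pub title: String,
    pub items: Vec<String>,
}

/// Minimal HTML escaping so user-supplied text cannot inject markup.
fn escape_html(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '&' => out.push_str("&amp;"),
            '<' => out.push_str("&lt;"),
            '>' => out.push_str("&gt;"),
            '"' => out.push_str("&quot;"),
            '\'' => out.push_str("&#39;"),
            _ => out.push(c),
        }
    }
    out
}

/// A pure render function: the same state always yields the same HTML,
/// so it can run in the browser or on an edge server (e.g., inside WasmEdge).
pub fn render(state: &TodoList) -> String {
    let mut html = format!("<h1>{}</h1><ul>", escape_html(&state.title));
    for item in &state.items {
        html.push_str(&format!("<li>{}</li>", escape_html(item)));
    }
    html.push_str("</ul>");
    html
}
```

Because `render` has no side effects, compiling it once to WebAssembly lets the server pre-render the page and the client re-render it later without the two outputs drifting apart.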

We are also exploring ways to render more complex UI and interactions on WasmEdge-based edge servers, and then stream the rendered results to the client application. Potential examples include:

- Render Unity3D animations on the edge server (based on WebAssembly rendering of Unity3D)

- Render interactive video (generated from AI) on the edge server

Of course, the edge cloud could grow well beyond SSR for UI components. It could also host high-performance microservices for business logic and serverless functions. Read on to the next chapter.

Microservices

The edge cloud can run application logic microservices very close to the client device.

- The microservices could be stateless computational tasks, such as AI inference and stream data analysis, which offload computation from the client.

- The microservices could also interact with data cache services that sync with backend databases.

The edge cloud has advantages such as low latency, high security, and high performance. Operationally, WasmEdge can be embedded into cloud-native infrastructure via its SDKs in C, Go and Rust. It is also an OCI compliant runtime that can be directly managed by container tools as a lightweight and high-performance alternative to Linux containers. The following application frameworks have been tested to work with WasmEdge-based microservices.

Dapr (Distributed Application Runtime)

Service mesh (work in progress)

- Linkerd

- MOSN

- Envoy

Orchestration and management

Serverless Function-As-A-Service in Public Clouds

WasmEdge works with existing serverless or Jamstack platforms to provide a high-performance, portable and secure runtime for functions. It offers significant benefits even when it runs inside Docker or microVMs on those platforms.

AWS Lambda

Tencent Serverless Functions

Vercel Serverless Functions

Netlify Functions

Second State Functions

Serverless Software-As-A-Service Functions

WasmEdge can support customized SaaS extensions or applications using serverless functions instead of traditional network APIs. That dramatically improves SaaS users' and developers' productivity.

- WasmEdge could be embedded into SaaS products to execute user-defined functions. In this scenario, the WasmEdge function API replaces the SaaS web API. The embedded WasmEdge functions are much faster, safer, and easier to use than RPC functions over the web.

- Edge servers could provide WasmEdge-based containers to interact with existing SaaS or PaaS APIs without requiring users to run their own servers (e.g., callback servers). The serverless API services can be co-located in the same networks as the SaaS to provide optimal performance and security.

The examples below showcase how WasmEdge-based serverless functions connect together SaaS APIs from different services, and process data flows across those SaaS APIs according to each user's business logic.

Slack

Lark

Lark, also known as 飞书 (Feishu), is sometimes described as the Chinese Slack. It was created by ByteDance, the parent company of TikTok.

WasmEdge On Smart Devices

Smart device apps could embed WasmEdge as a middleware runtime to render interactive content on the UI, connect to native device drivers, and access specialized hardware features (e.g., the GPU for AI inference). The benefits of the WasmEdge runtime over native-compiled machine code include security, safety, portability, manageability, OTA upgradability, and developer productivity. WasmEdge runs on the following device OSes.

With WasmEdge on both the device and the edge server, we can support isomorphic Server-Side Rendering (SSR) and microservices for rich-client mobile applications that are both portable and upgradable.

JavaScript or Domain Specific Language Runtime

In order for WebAssembly to be widely adopted by developers as a runtime, it must support "easy" languages like JavaScript. Or, better yet, through its advanced compiler toolchain, WasmEdge could support high performance DSLs (Domain Specific Languages), which are low code solutions designed for specific tasks.

JavaScript

WasmEdge can act as a cloud-native JavaScript runtime by embedding a JS execution engine or interpreter. It is faster and lighter than running a JS engine inside Docker. WasmEdge supports JS APIs to access native extension libraries such as network sockets, TensorFlow, and user-defined shared libraries. It also allows embedding JS into other high-performance languages (e.g., Rust) or using Rust / C to implement JS functions.

- Tutorials

- Examples

- WasmEdge’s embedded QuickJS engine

DSL for image classification

The image classification DSL is a YAML format that allows the user to specify a TensorFlow model and its parameters. WasmEdge takes an image as the input of the DSL and outputs the detected item name / label.

DSL for chatbots

A chatbot DSL function takes an input string and responds with a reply string. The DSL specifies the internal state transitions of the chatbot, as well as AI models for language understanding. This work is in progress.
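To make the "internal state transitions" idea concrete, here is a minimal state-machine sketch in Rust. The states, replies, and the `Chatbot` type are hypothetical; an actual chatbot DSL would declare them in its own syntax and call an AI model where the comment indicates.

```rust
/// Hypothetical chatbot states; a real DSL would declare these declaratively.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum State {
    Greeting,
    AskName,
    Chatting,
}

pub struct Chatbot {
    state: State,
    name: Option<String>,
}

impl Chatbot {
    pub fn new() -> Self {
        Chatbot { state: State::Greeting, name: None }
    }

    pub fn state(&self) -> State {
        self.state
    }

    /// Takes an input string, returns a reply string, and advances the state.
    pub fn respond(&mut self, input: &str) -> String {
        match self.state {
            State::Greeting => {
                self.state = State::AskName;
                "Hello! What is your name?".to_string()
            }
            State::AskName => {
                let name = input.trim().to_string();
                let reply = format!("Nice to meet you, {}.", name);
                self.name = Some(name);
                self.state = State::Chatting;
                reply
            }
            State::Chatting => {
                // Placeholder: a real DSL would run a language-understanding model here.
                let who = self.name.as_deref().unwrap_or("friend");
                format!("{}, you said: {}", who, input.trim())
            }
        }
    }
}
```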

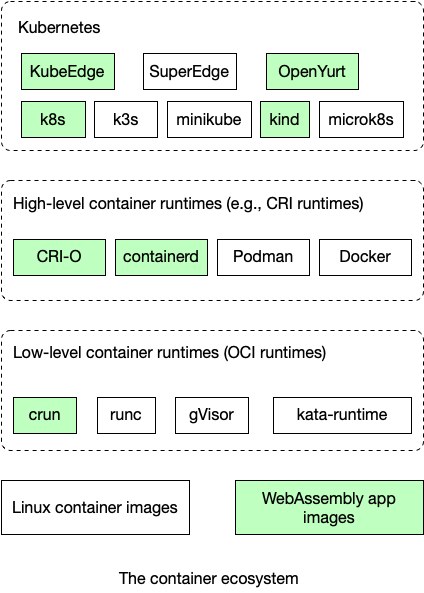

WasmEdge in Kubernetes

Developers can leverage container tools such as Kubernetes, Docker and CRI-O to deploy, manage, and run lightweight WebAssembly applications. In this chapter, we will demonstrate how Kubernetes ecosystem tools work with WasmEdge WebAssembly applications.

Compared with Linux containers, WebAssembly could be 100x faster at startup, have a much smaller memory and disk footprint, and have a better-defined safety sandbox. However, the trade-off is that WebAssembly requires its own language SDKs and compiler toolchains, making it a more constrained developer environment than Linux containers. WebAssembly is increasingly used in Edge Computing scenarios where it is difficult to deploy Linux containers or when the application performance is vital.

One of the great advantages of Linux application containers is the rich ecosystem of tools. The good news is that you can use the exact same tools to manage WebAssembly applications, enabling Linux containers and WebAssembly apps to run side-by-side in the same system.

The contents of this chapter are organized by the approaches for integrating WasmEdge into container toolchains.

- The slimmed Linux container tailored for WasmEdge offers the easiest option (but with performance trade-offs) to integrate WasmEdge applications into any container tooling system.

- The most important integration approach is to replace the underlying OCI runtime of the toolchain stack with a WasmEdge-enabled crun runtime.

- Quick start provides simple and scripted tutorials to run WasmEdge-based applications as container images in Kubernetes.

- Demo apps discusses the two demo WasmEdge applications we will run in Kubernetes clusters. Those applications are compiled from Rust source code, packaged as OCI images, and uploaded to Docker Hub.

- Container runtimes covers how to configure low level container runtimes, such as crun, to load and run WebAssembly OCI images.

- CRI runtimes covers how to configure and use high level container runtimes, such as CRI-O and containerd, to load and run WebAssembly OCI images on top of low level container runtimes.

- Kubernetes covers how to configure and use Kubernetes and Kubernetes variations, such as KubeEdge and SuperEdge, to load and run WebAssembly OCI images on top of CRI runtimes.

- If you cannot replace the OCI runtime in your toolchain with a WasmEdge-enabled crun, you can use a containerd shim to start and run a WasmEdge application without any intrusive change.

The goal is to load and run WebAssembly OCI images side by side with Linux OCI images (e.g., today's Docker containers) across the entire Kubernetes stack.

Quick start

We have created Ubuntu-based scripts for you to quickly get started with the following combination of runtimes in a standard Kubernetes setup.

| CRI (high level) runtime | OCI (low level) runtime | Quick start script |
|---|---|---|
| CRI-O | crun + WasmEdge | Script |
| containerd | crun + WasmEdge | Script |

CRI-O and crun

You can use the CRI-O install.sh script to install CRI-O and crun on Ubuntu 20.04.

wget -qO- https://raw.githubusercontent.com/second-state/wasmedge-containers-examples/main/crio/install.sh | bash

Next, install Kubernetes using the following script.

wget -qO- https://raw.githubusercontent.com/second-state/wasmedge-containers-examples/main/kubernetes_crio/install.sh | bash

The simple_wasi_application.sh script shows how to pull a WebAssembly application from Docker Hub, and then run it as a containerized application in Kubernetes.

wget -qO- https://raw.githubusercontent.com/second-state/wasmedge-containers-examples/main/kubernetes_crio/simple_wasi_application.sh | bash

You should see results from the WebAssembly program printed in the console log. Here is an example.

containerd and crun

You can use the containerd install.sh script to install containerd and crun on Ubuntu 20.04.

wget -qO- https://raw.githubusercontent.com/second-state/wasmedge-containers-examples/main/containerd/install.sh | bash

Next, install Kubernetes using the following script.

wget -qO- https://raw.githubusercontent.com/second-state/wasmedge-containers-examples/main/kubernetes_containerd/install.sh | bash

The simple_wasi_application.sh script shows how to pull a WebAssembly application from Docker Hub, and then run it as a containerized application in Kubernetes.

wget -qO- https://raw.githubusercontent.com/second-state/wasmedge-containers-examples/main/kubernetes_containerd/simple_wasi_application.sh | bash

You should see results from the WebAssembly program printed in the console log. Here is an example.

Read on to the rest of this chapter to learn how exactly those runtimes are configured.

Demo apps

In this chapter, we will cover two demo apps. We will build them from Rust source code, build OCI images around them, and then publish the images to Docker Hub.

If you have not done so, please

Next, explore the examples

Since we have already built and published those demo apps on Docker Hub, you could also just go straight to the container runtime sections to use these images.

A simple WebAssembly example

In this article, I will show you how to build a container image for a WebAssembly application. It can then be started and managed by Kubernetes ecosystem tools, such as CRI-O, Docker, crun, and Kubernetes.

Prerequisites

If you simply want a wasm bytecode file to test as a container image, you can skip the building process and just download the wasm file here.

If you have not done so already, follow these simple instructions to install Rust.

Download example code

git clone https://github.com/second-state/wasm-learning

cd wasm-learning/cli/wasi

Build the WASM bytecode

rustup target add wasm32-wasi

cargo build --target wasm32-wasi --release

The wasm bytecode application is in the target/wasm32-wasi/release/wasi_example_main.wasm file. You can now publish and use it as a container image.

Apply executable permission on the Wasm bytecode

chmod +x target/wasm32-wasi/release/wasi_example_main.wasm

Create Dockerfile

Create a file called Dockerfile in the target/wasm32-wasi/release/ folder with the following content:

FROM scratch

ADD wasi_example_main.wasm /

CMD ["/wasi_example_main.wasm"]

Create container image with annotations

Please note that adding self-defined annotations is still a new feature in buildah.

The crun container runtime can start the above WebAssembly-based container image. But it requires the module.wasm.image/variant=compat-smart annotation on the container image to indicate that it is a WebAssembly application without a guest OS. You can find the details in the official crun repo.
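Conceptually, the runtime only needs to inspect the image annotations for that key/value pair before deciding to hand the entrypoint to WasmEdge instead of executing it as a Linux binary. The Rust sketch below shows such a check; `is_wasm_compat_smart` is a hypothetical helper, not crun's code, and crun's own handler logic may differ (for example, it may accept other variant values).

```rust
use std::collections::HashMap;

/// Annotation key and value described in the text above.
const WASM_VARIANT_KEY: &str = "module.wasm.image/variant";
const WASM_VARIANT_COMPAT_SMART: &str = "compat-smart";

/// Hypothetical helper: returns true if the image annotations mark the image
/// as a WebAssembly application without a guest OS.
pub fn is_wasm_compat_smart(annotations: &HashMap<String, String>) -> bool {
    annotations.get(WASM_VARIANT_KEY).map(String::as_str) == Some(WASM_VARIANT_COMPAT_SMART)
}
```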

To add module.wasm.image/variant=compat-smart annotation in the container image, you will need the latest buildah. Currently, Docker does not support this feature. Please follow the install instructions of buildah to build the latest buildah binary.

Build and install the latest buildah on Ubuntu

On Ubuntu zesty and xenial, use these commands to prepare for buildah.

sudo apt-get -y install software-properties-common

export OS="xUbuntu_20.04"

sudo bash -c "echo \"deb https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/ /\" > /etc/apt/sources.list.d/devel:kubic:libcontainers:stable.list"

sudo bash -c "curl -L https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/Release.key | apt-key add -"

sudo add-apt-repository -y ppa:alexlarsson/flatpak

sudo apt-get -y -qq update

sudo apt-get -y install bats git libapparmor-dev libdevmapper-dev libglib2.0-dev libgpgme-dev libseccomp-dev libselinux1-dev skopeo-containers go-md2man containers-common

sudo apt-get -y install golang-1.16 make

Then, follow these steps to build and install buildah on Ubuntu.

mkdir -p ~/buildah

cd ~/buildah

export GOPATH=`pwd`

git clone https://github.com/containers/buildah ./src/github.com/containers/buildah

cd ./src/github.com/containers/buildah

PATH=/usr/lib/go-1.16/bin:$PATH make

sudo cp bin/buildah /usr/bin/buildah

buildah --help

Create and publish a container image with buildah

In the target/wasm32-wasi/release/ folder, do the following.

$ sudo buildah build --annotation "module.wasm.image/variant=compat-smart" -t wasm-wasi-example .

# make sure docker is installed and running

# systemctl status docker

# to make sure a regular user can use docker

# sudo usermod -aG docker $USER

# newgrp docker

# You may need to use docker login to create the `~/.docker/config.json` for auth.

$ sudo buildah push --authfile ~/.docker/config.json wasm-wasi-example docker://docker.io/wasmedge/example-wasi:latest

That's it! Now you can try to run it in CRI-O or Kubernetes!

HTTP server example

This part has been moved to https://github.com/second-state/wasmedge-containers-examples/blob/main/http_server_wasi_app.md. Please use our new docs.

Container runtimes

The container image can be started by any OCI-compliant container runtime, such as:

- crun: a high performance and lightweight container runtime written in C

- runc: a widely used container runtime written in Go

- youki: an OCI-compatible container runtime implementation written in Rust

crun

This part has been moved to https://wasmedge.org/docs/develop/deploy/oci-runtime/crun. Please use our new docs.

runc

Coming soon, or you can help out.

youki

This part has been moved to https://wasmedge.org/docs/develop/deploy/oci-runtime/youki. Please use our new docs.

CRI runtimes

High-level container runtimes, such as CRI-O and containerd, pull container images from registries (e.g., Docker Hub), manage them on disk, and launch a lower-level runtime to run container processes. In this chapter, you can check out specific tutorials for CRI-O and containerd.

CRI-O

This doc has moved to https://wasmedge.org/docs/develop/deploy/cri-runtime/cri-o-crun. Please use our new docs.

containerd

This doc has moved to https://wasmedge.org/docs/develop/deploy/cri-runtime/containerd-crun. Please use our new docs.

Kubernetes

Most high-level container runtimes implement Kubernetes' CRI (Container Runtime Interface) spec so that they can be managed by Kubernetes tools. That means you can use Kubernetes tools to manage the WebAssembly app image in pods and namespaces. Check out specific instructions for different flavors of Kubernetes setup in this chapter.

Kubernetes + CRI-O

This doc has moved to https://wasmedge.org/docs/develop/deploy/kubernetes/kubernetes-cri-o. Please use our new docs.

Kubernetes + containerd

This part has been moved to https://wasmedge.org/docs/develop/deploy/kubernetes/kubernetes-containerd-crun. Please use our new docs.

Kubernetes in Docker (KinD)

This part has been moved to https://wasmedge.org/docs/develop/deploy/kubernetes/kind. Please use our new docs.

Create a crun demo for KubeEdge

This doc has moved to https://wasmedge.org/docs/develop/deploy/kubernetes/kubedge. Please use our new docs.

SuperEdge

This part has been moved to https://wasmedge.org/docs/develop/deploy/kubernetes/superedge. Please use our new docs.

OpenYurt + containerd + crun

This part has been moved to https://wasmedge.org/docs/develop/deploy/kubernetes/openyurt. Please use our new docs.

Knative

This part has been moved to https://wasmedge.org/docs/develop/deploy/kubernetes/knative. Please use our new docs.

Kwasm

Running WasmEdge apps with the Docker CLI

The Docker CLI is a very popular developer tool. However, it is not easy to replace Docker's underlying OCI runtime (runc) with the WasmEdge-enabled crun. In this section, we will discuss two ways to run WasmEdge applications in Docker.

Use the slim Linux container

This part has been moved to https://wasmedge.org/docs/develop/deploy/using-wasmedge-in-docker. Please use our new docs.

Use the containerd shim

As we discussed, wrapping WebAssembly inside a Docker Linux container results in performance and security penalties. However, we also cannot easily replace the OCI runtime (runc) in the Docker toolchain. In this chapter, we will discuss another approach to start and run WebAssembly bytecode applications directly from the Docker CLI.

Coming soon

App Frameworks and Platforms

WasmEdge applications can be plugged into existing application frameworks or platforms. WasmEdge provides a safe and efficient extension mechanism for those frameworks.

In this chapter, we will introduce several such frameworks and platforms.

- Service mesh and frameworks support WasmEdge to run as containers for microservices. We will cover distributed application framework Dapr, service mesh MOSN, and event mesh Apache EventMesh.

- Application frameworks support WasmEdge as an embedded function or plug-in runtime. We will cover streaming data framework YoMo and Go function scheduler / framework Reactr.

- Serverless platforms allow WasmEdge programs to run as serverless functions in their infrastructure. We will cover AWS Lambda, Tencent Serverless Cloud Functions, Vercel Serverless Functions, Netlify Functions, and Second State Functions.

Service mesh and distributed runtimes

This part has been moved to https://wasmedge.org/docs/category/use-cases. Please use our new docs.

Dapr

In this article, I will demonstrate how to use WasmEdge as a sidecar application runtime for Dapr. There are two ways to do this:

- Standalone WasmEdge is the recommended approach: write a microservice using Rust or JavaScript, and run it in WasmEdge. The WasmEdge application serves web requests and communicates with the sidecar via sockets using the Dapr API. In this case, we can run WasmEdge as a managed container in k8s.

- Alternatively, with embedded WasmEdge, you create a simple microservice in Rust or Go that listens for web requests and communicates with the Dapr sidecar. It passes the request data to a WasmEdge runtime for processing. The business logic of the microservice is a WebAssembly function created and deployed by an application developer.

While the first approach (running the entire microservice in WasmEdge) is much preferred, we are still working on fully fledged Dapr SDKs for WasmEdge. You can track their progress in GitHub issues -- Rust and JavaScript.

Quick start

First you need to install Dapr and WasmEdge. Go and Rust are optional for the standalone WasmEdge approach. However, they are required for the demo app since it showcases both standalone and embedded WasmEdge approaches.

Fork or clone the demo application from GitHub. You can use this repo as your own application template.

git clone https://github.com/second-state/dapr-wasm

The demo has 4 Dapr sidecar applications. The web-port project provides a public web service for a static HTML page. This is the application’s UI. From the static HTML page, the user can select a microservice to turn an input image into grayscale. All 3 microservices below perform the same function. They are just implemented using different approaches.

- Standalone WasmEdge approach: The image-api-wasi-socket-rs project provides a standalone WasmEdge sidecar microservice that takes the input image and returns the grayscale image. The microservice is written in Rust and compiled into WebAssembly bytecode to run in WasmEdge.

- Embedded WasmEdge approach #1: The image-api-rs project provides a simple Rust-based microservice. It embeds a WasmEdge function to turn an input image into a grayscale image.

- Embedded WasmEdge approach #2: The image-api-go project provides a simple Go-based microservice. It embeds a WasmEdge function to turn an input image into a grayscale image.
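Whichever microservice the user picks, the request can travel through Dapr's HTTP service-invocation API, whose URL has the form `http://localhost:<dapr-http-port>/v1.0/invoke/<app-id>/method/<method>`. The Rust sketch below builds such a URL. The app IDs come from the `dapr run --app-id` commands in this section, and `/api/image` from the Go handler registration; the UI choice strings and the sidecar port passed in are assumptions for illustration, and this is not how the web-port project is necessarily implemented.

```rust
/// Builds a Dapr service-invocation URL for calling `app_id` through the
/// local Dapr sidecar listening on `dapr_http_port`.
pub fn dapr_invoke_url(dapr_http_port: u16, app_id: &str, method: &str) -> String {
    format!(
        "http://localhost:{}/v1.0/invoke/{}/method/{}",
        dapr_http_port,
        app_id,
        method.trim_start_matches('/')
    )
}

/// Hypothetical mapping from a UI selection to the Dapr app IDs used in this demo.
pub fn app_id_for(choice: &str) -> Option<&'static str> {
    match choice {
        "wasi-socket-rs" => Some("image-api-wasi-socket-rs"),
        "rs" => Some("image-api-rs"),
        "go" => Some("image-api-go"),
        _ => None,
    }
}
```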

You can follow the instructions in the README to start the sidecar services. Here are commands to build the WebAssembly functions and start the sidecar services. The first set of commands deploys the static web page service and the standalone WasmEdge service written in Rust. It forms a complete application to turn an input image into grayscale.

# Build and start the static HTML web page service for the UI and router for sending the uploaded image to the grayscale microservice

cd web-port

go build

./run_web.sh

cd ../

# Build the standalone image grayscale web service for WasmEdge

cd image-api-wasi-socket-rs

cargo build --target wasm32-wasi

cd ../

# Run the microservice as a Dapr sidecar app

cd image-api-wasi-socket-rs

./run_api_wasi_socket_rs.sh

cd ../

The second set of commands creates the alternative microservices for the embedded WasmEdge function.

# Build the grayscale WebAssembly functions, and deploy them to the sidecar projects

cd functions/grayscale

./build.sh

cd ../../

# Build and start the Rust-based microservice for embedding the grayscale WasmEdge function

cd image-api-rs

cargo build --release

./run_api_rs.sh

cd ../

# Build and start the Go-based microservice for embedding the grayscale WasmEdge function

cd image-api-go

go build

./run_api_go.sh

cd ../

Finally, you should be able to see the web UI in your browser.

Recommended: The standalone WasmEdge microservice in Rust

The standalone WasmEdge microservice starts a non-blocking TCP server inside WasmEdge. The TCP server passes incoming requests to handle_client(), which passes HTTP requests to handle_http(), which calls grayscale() to process the image data in the request.

```rust
fn main() -> std::io::Result<()> {
    let port = std::env::var("PORT").unwrap_or(9005.to_string());
    println!("new connection at {}", port);
    let listener = TcpListener::bind(format!("127.0.0.1:{}", port))?;
    loop {
        let _ = handle_client(listener.accept()?.0);
    }
}

fn handle_client(mut stream: TcpStream) -> std::io::Result<()> {
    ... ...
}

fn handle_http(req: Request<Vec<u8>>) -> bytecodec::Result<Response<String>> {
    ... ...
}

fn grayscale(image: &[u8]) -> Vec<u8> {
    let detected = image::guess_format(&image);
    let mut buf = vec![];
    if detected.is_err() {
        return buf;
    }
    let image_format_detected = detected.unwrap();
    let img = image::load_from_memory(&image).unwrap();
    let filtered = img.grayscale();
    match image_format_detected {
        ImageFormat::Gif => {
            filtered.write_to(&mut buf, ImageOutputFormat::Gif).unwrap();
        }
        _ => {
            filtered.write_to(&mut buf, ImageOutputFormat::Png).unwrap();
        }
    };
    return buf;
}
```
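The body of `handle_client()` is elided above. One step any such handler must perform is separating the HTTP head from the body before the image bytes can reach `grayscale()`. The std-only sketch below shows that split; `split_http_request` is a hypothetical helper, not the demo's code, and a production handler would also honor `Content-Length` and keep reading until the whole body has arrived.

```rust
/// Hypothetical helper: split a raw HTTP/1.1 request into its request line,
/// header lines, and body. Returns None if the blank line ending the headers
/// has not been received yet.
pub fn split_http_request(raw: &[u8]) -> Option<(String, Vec<String>, &[u8])> {
    // The header section ends at the first "\r\n\r\n".
    let end = raw.windows(4).position(|w| w == b"\r\n\r\n")?;
    let head = String::from_utf8_lossy(&raw[..end]);
    let mut lines = head.split("\r\n");
    let request_line = lines.next()?.to_string();
    let headers = lines.map(|l| l.to_string()).collect();
    // Everything after the terminator is the (possibly partial) body.
    Some((request_line, headers, &raw[end + 4..]))
}
```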

Work in progress: It will soon interact with the Dapr sidecar through the WasmEdge Dapr SDK in Rust.

Now, you can build the microservice. It is a simple matter of compiling from Rust to WebAssembly.

cd image-api-wasi-socket-rs

cargo build --target wasm32-wasi

Deploy the WasmEdge microservice in Dapr as follows.

dapr run --app-id image-api-wasi-socket-rs \

--app-protocol http \

--app-port 9005 \

--dapr-http-port 3503 \

--components-path ../config \

--log-level debug \

wasmedge ./target/wasm32-wasi/debug/image-api-wasi-socket-rs.wasm

Alternative: The embedded WasmEdge microservices

The embedded WasmEdge approach requires us to create a WebAssembly function for the business logic (image processing) first, and then embed it into simple Dapr microservices.

Rust function for image processing

The Rust function is simple. It uses the wasmedge_bindgen macro to make it easy to call the function from a Go or Rust host embedding the WebAssembly function. It takes the image data as a comma-separated list of byte values and returns base64-encoded image data for the web.

```rust
#[wasmedge_bindgen]
pub fn grayscale(image_data: String) -> String {
    let image_bytes = image_data
        .split(",")
        .map(|x| x.parse::<u8>().unwrap())
        .collect::<Vec<u8>>();
    return grayscale::grayscale_internal(&image_bytes);
}
```
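Because `grayscale()` receives its input as comma-separated decimal bytes, the caller must encode the image the same way. The sketch below shows both directions in std-only Rust; `bytes_to_csv` and `csv_to_bytes` are hypothetical helpers, and the decoder deliberately returns an error instead of calling `unwrap()` as the function above does.

```rust
/// Hypothetical caller-side helper: encode raw bytes as the comma-separated
/// decimal string that `grayscale(image_data: String)` parses.
pub fn bytes_to_csv(bytes: &[u8]) -> String {
    bytes.iter().map(|b| b.to_string()).collect::<Vec<_>>().join(",")
}

/// The inverse: report malformed input (e.g., a value above 255) as an error.
pub fn csv_to_bytes(s: &str) -> Result<Vec<u8>, std::num::ParseIntError> {
    s.split(',').map(|x| x.trim().parse::<u8>()).collect()
}
```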

The Rust function that actually performs the task is as follows.

```rust
pub fn grayscale_internal(image_data: &[u8]) -> String {
    let image_format_detected: ImageFormat = image::guess_format(&image_data).unwrap();
    let img = image::load_from_memory(&image_data).unwrap();
    let filtered = img.grayscale();
    let mut buf = vec![];
    match image_format_detected {
        ImageFormat::Gif => {
            filtered.write_to(&mut buf, ImageOutputFormat::Gif).unwrap();
        }
        _ => {
            filtered.write_to(&mut buf, ImageOutputFormat::Png).unwrap();
        }
    };
    let mut base64_encoded = String::new();
    base64::encode_config_buf(&buf, base64::STANDARD, &mut base64_encoded);
    return base64_encoded.to_string();
}
```
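Apart from decoding and re-encoding, the work inside `img.grayscale()` is a per-pixel luma conversion. The std-only sketch below shows that arithmetic on a packed RGB8 buffer using Rec. 709 weights (0.2126, 0.7152, 0.0722) in integer form; it is an illustration only, and the `image` crate's exact coefficients and rounding may differ.

```rust
/// Rec. 709 luma weights scaled by 10_000.
const LUMA: [u32; 3] = [2126, 7152, 722];

/// Converts a packed RGB8 buffer (r, g, b, r, g, b, ...) into one luma byte
/// per pixel. A trailing partial pixel, if any, is ignored.
pub fn rgb_to_gray(rgb: &[u8]) -> Vec<u8> {
    rgb.chunks_exact(3)
        .map(|p| {
            let y = LUMA[0] * p[0] as u32 + LUMA[1] * p[1] as u32 + LUMA[2] * p[2] as u32;
            // The weights sum to 10_000, so the result always fits in a u8.
            (y / 10_000) as u8
        })
        .collect()
}
```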

The Go host wrapper for microservice

The Go-based microservice embeds the above image processing function in WasmEdge. The microservice itself is a web server and utilizes the Dapr Go SDK.

```go
package main

import (
	"log"
	"net/http"

	daprd "github.com/dapr/go-sdk/service/http"
)

func main() {
	// Dapr HTTP service listening on port 9003 (matches --app-port below).
	s := daprd.NewService(":9003")
	// imageHandlerWASI is defined elsewhere in the project.
	if err := s.AddServiceInvocationHandler("/api/image", imageHandlerWASI); err != nil {
		log.Fatalf("error adding invocation handler: %v", err)
	}
	if err := s.Start(); err != nil && err != http.ErrServerClosed {
		log.Fatalf("error listening: %v", err)
	}
}
```

The imageHandlerWASI() function starts a WasmEdge instance and calls the image processing (grayscale) function in it via wasmedge_bindgen.

Build and deploy the Go microservice to Dapr as follows.

cd image-api-go

go build

dapr run --app-id image-api-go \

--app-protocol http \

--app-port 9003 \

--dapr-http-port 3501 \

--log-level debug \

--components-path ../config \

./image-api-go

The Rust host wrapper for microservice

The Rust-based microservice embeds the above image processing function in WasmEdge. The microservice itself is a Tokio and Warp based web server.

```rust
#[tokio::main]
pub async fn run_server(port: u16) {
    pretty_env_logger::init();
    let home = warp::get().map(warp::reply);
    let image = warp::post()
        .and(warp::path("api"))
        .and(warp::path("image"))
        .and(warp::body::bytes())
        .map(|bytes: bytes::Bytes| {
            let v: Vec<u8> = bytes.iter().map(|&x| x).collect();
            let res = image_process_wasmedge_sys(&v);
            let _encoded = base64::encode(&res);
            Response::builder()
                .header("content-type", "image/png")
                .body(res)
        });
    let routes = home.or(image);
    let routes = routes.with(warp::cors().allow_any_origin());
    let log = warp::log("dapr_wasm");
    let routes = routes.with(log);
    warp::serve(routes).run((Ipv4Addr::UNSPECIFIED, port)).await
}
```

The image_process_wasmedge_sys() function starts a WasmEdge instance and calls the image processing (grayscale) function in it via wasmedge_bindgen.

Build and deploy the Rust microservice to Dapr as follows.

cd image-api-rs

cargo build --release

dapr stop image-api-rs

# Change this to your own path for WasmEdge

export LD_LIBRARY_PATH=/home/coder/.wasmedge/lib64/

dapr run --app-id image-api-rs \

--app-protocol http \

--app-port 9004 \

--dapr-http-port 3502 \

--components-path ../config \

--log-level debug \

./target/release/image-api-rs

That's it! Let us know your cool Dapr microservices in WebAssembly!

MOSN

Coming soon.

wasm-nginx-module

This part has been moved to https://wasmedge.org/docs/embed/use-case/wasm-nginx. Please use our new docs.

Apache EventMesh

Coming soon, or you can help out.

App frameworks

This part has been moved to https://wasmedge.org/docs/category/use-cases. Please use our new docs.

YoMo

YoMo is a programming framework enabling developers to build a distributed cloud system (Geo-Distributed Cloud System). YoMo's communication layer is built on top of the QUIC protocol, which brings high-speed data transmission. In addition, it has a built-in Streaming Serverless "streaming function", which significantly improves the development experience of distributed cloud systems. The distributed cloud system built by YoMo provides an ultra-high-speed communication mechanism between near-field computing power and terminals. It has a wide range of use cases in Metaverse, VR/AR, IoT, etc.

YoMo is written in the Go language. For streaming Serverless, Golang plugins and shared libraries are used to load users' code dynamically, which also have certain limitations for developers. Coupled with Serverless architecture's rigid demand for isolation, this makes WebAssembly an excellent choice for running user-defined functions.

For example, in real-time AI inference on AR/VR devices or in smart factories, a camera sends real-time unstructured data through YoMo to a computing node on a near-field MEC (multi-access edge computing) device. The hosted AI inference function is executed automatically on that data, and once inference completes, YoMo sends the result back to the end device in real time.

However, a challenge for YoMo is to incorporate and manage handler functions written by multiple outside developers on an edge computing node. This requires runtime isolation for those functions without sacrificing performance. Traditional software container solutions, such as Docker, are not up to the task: they are too heavy and too slow for real-time tasks.

WebAssembly provides a lightweight and high-performance software container. It is ideally suited as a runtime for YoMo’s data processing handler functions.

In this article, we will show you how to create a Rust function for Tensorflow-based image classification, compile it into WebAssembly, and then use YoMo to run it as a stream data handler. We use WasmEdge as our WebAssembly runtime because it offers the highest performance and flexibility compared with other WebAssembly runtimes. It is the only WebAssembly VM that reliably supports Tensorflow. YoMo manages WasmEdge VM instances and the contained WebAssembly bytecode apps through WasmEdge’s Golang API.

Source code: https://github.com/yomorun/yomo-wasmedge-tensorflow

Check out the WasmEdge image classification function in action in YoMo.

Prerequisite

You will need Go (Golang) installed; we assume you already have it.

The Go version must be newer than 1.15 for this example to work.

You also need to install the YoMo CLI application. It orchestrates and coordinates data streaming and handler function invocations.

$ go install github.com/yomorun/cli/yomo@latest
$ yomo version
YoMo CLI version: v0.1.3

Next, install WasmEdge and its TensorFlow shared libraries. WasmEdge is a leading WebAssembly runtime hosted by the CNCF. We will use it to embed and run WebAssembly programs from YoMo.

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install.sh | bash

Finally, since our demo WebAssembly functions are written in Rust, you will also need a Rust compiler.

For the rest of the demo, fork and clone the source code repository.

git clone https://github.com/yomorun/yomo-wasmedge-tensorflow.git

The image classification function

The image classification function to process the YoMo image stream is written in Rust. It utilizes the WasmEdge Tensorflow API to process an input image.

use std::time::Instant;
use wasmedge_bindgen::*;
use wasmedge_bindgen_macro::*;

#[wasmedge_bindgen]
pub fn infer(image_data: Vec<u8>) -> Result<Vec<u8>, String> {
    let start = Instant::now();

    // Load the TFLite model and its meta data (the text label for each recognized object number)
    let model_data: &[u8] = include_bytes!("lite-model_aiy_vision_classifier_food_V1_1.tflite");
    let labels = include_str!("aiy_food_V1_labelmap.txt");

    // Pre-process the image to a format that can be used by this model
    let flat_img = wasmedge_tensorflow_interface::load_jpg_image_to_rgb8(&image_data[..], 192, 192);
    println!("RUST: Loaded image in ... {:?}", start.elapsed());

    // Run the TFLite model using the WasmEdge Tensorflow API
    let mut session = wasmedge_tensorflow_interface::Session::new(
        &model_data,
        wasmedge_tensorflow_interface::ModelType::TensorFlowLite,
    );
    session.add_input("input", &flat_img, &[1, 192, 192, 3]).run();
    let res_vec: Vec<u8> = session.get_output("MobilenetV1/Predictions/Softmax");

    // Find the object index in res_vec that has the greatest probability
    let mut max_index: i32 = -1;
    let mut max_value: u8 = 0;
    for (i, &cur) in res_vec.iter().enumerate() {
        if cur > max_value {
            max_value = cur;
            max_index = i as i32;
        }
    }
    println!("RUST: index {}, prob {}", max_index, max_value);

    // Translate the probability into a confidence level
    let confidence = if max_value > 200 {
        "is very likely"
    } else if max_value > 125 {
        "is likely"
    } else {
        "could be"
    };

    // Translate the object index into a label (food_name) from the model meta data
    let ret_str = if max_value > 50 {
        let food_name = labels.lines().nth(max_index as usize).unwrap().to_string();
        format!(
            "It {} a <a href='https://www.google.com/search?q={}'>{}</a> in the picture",
            confidence, food_name, food_name
        )
    } else {
        "It does not appear to be a food item in the picture.".to_string()
    };

    println!("RUST: Finished post-processing in ... {:?}", start.elapsed());
    Ok(ret_str.into_bytes())
}
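The post-processing at the end of `infer()` (pick the highest-scoring class, turn its quantized score into a confidence phrase, then look up the label) can be factored into small pure functions that are easy to unit-test outside WasmEdge. The sketch below uses only the standard library; the thresholds are copied from the code above, and the function names are hypothetical.

```rust
/// Confidence wording keyed on the quantized (0-255) score of the best class,
/// using the same thresholds as infer().
pub fn confidence_phrase(max_value: u8) -> &'static str {
    if max_value > 200 {
        "is very likely"
    } else if max_value > 125 {
        "is likely"
    } else {
        "could be"
    }
}

/// Returns (index, score) of the highest score, or None for an empty slice.
/// Ties keep the first index, like the strict `>` comparison in infer().
pub fn argmax(scores: &[u8]) -> Option<(usize, u8)> {
    let mut best: Option<(usize, u8)> = None;
    for (i, &v) in scores.iter().enumerate() {
        let better = match best {
            None => true,
            Some((_, b)) => v > b,
        };
        if better {
            best = Some((i, v));
        }
    }
    best
}

/// Looks up the label on line `index` of a newline-separated label map.
pub fn label_for(labels: &str, index: usize) -> Option<&str> {
    labels.lines().nth(index)
}
```

Unlike the inline loop, `label_for` returns `None` instead of panicking when the index is past the end of the label file.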

Add the wasm32-wasi target to your Rust toolchain, then compile this function into WebAssembly bytecode.

rustup target add wasm32-wasi

cd flow/rust_mobilenet_food
cargo build --target wasm32-wasi --release
# The output WASM will be target/wasm32-wasi/release/rust_mobilenet_food_lib.wasm

# Copy the wasm bytecode file to the flow/ directory
cp target/wasm32-wasi/release/rust_mobilenet_food_lib.wasm ../

To get the best performance out of WasmEdge, enable AOT mode by compiling the .wasm file into a native .so file. Run the following in the flow/ directory.

wasmedge compile rust_mobilenet_food_lib.wasm rust_mobilenet_food_lib.so

Integration with YoMo

On the YoMo side, we use the WasmEdge Golang API to start and run a WasmEdge VM for the image classification function. The app.go file in the source code project is as follows.

package main

import (
	"crypto/sha1"
	"fmt"
	"log"
	"os"
	"sync/atomic"

	"github.com/second-state/WasmEdge-go/wasmedge"
	bindgen "github.com/second-state/wasmedge-bindgen/host/go"
	"github.com/yomorun/yomo"
)

var (
	counter uint64
)

const ImageDataKey = 0x10

func main() {
	// Connect to Zipper service
	sfn := yomo.NewStreamFunction("image-recognition", yomo.WithZipperAddr("localhost:9900"))
	defer sfn.Close()

	// set only monitoring data
	sfn.SetObserveDataID(ImageDataKey)

	// set handler
	sfn.SetHandler(Handler)

	// start
	err := sfn.Connect()
	if err != nil {
		log.Print("❌ Connect to zipper failure: ", err)
		os.Exit(1)
	}

	select {}
}

// Handler processes the data in the stream
func Handler(img []byte) (byte, []byte) {
	// Initialize WasmEdge's VM
	vmConf, vm := initVM()
	bg := bindgen.Instantiate(vm)
	defer bg.Release()
	defer vm.Release()
	defer vmConf.Release()

	// recognize the image
	res, err := bg.Execute("infer", img)
	if err == nil {
		fmt.Println("GO: Run bindgen -- infer:", string(res))
	} else {
		fmt.Println("GO: Run bindgen -- infer FAILED")
	}

	// print logs
	hash := genSha1(img)
	log.Printf("✅ received image-%d hash %v, img_size=%d \n", atomic.AddUint64(&counter, 1), hash, len(img))

	return 0x11, nil
}

// genSha1 generates the hash value of the image
func genSha1(buf []byte) string {
	h := sha1.New()
	h.Write(buf)
	return fmt.Sprintf("%x", h.Sum(nil))
}

// initVM initializes WasmEdge's VM
func initVM() (*wasmedge.Configure, *wasmedge.VM) {
	wasmedge.SetLogErrorLevel()

	// Set Tensorflow not to print debug info
	os.Setenv("TF_CPP_MIN_LOG_LEVEL", "3")
	os.Setenv("TF_CPP_MIN_VLOG_LEVEL", "3")

	// Create configure
	vmConf := wasmedge.NewConfigure(wasmedge.WASI)

	// Create VM with configure
	vm := wasmedge.NewVMWithConfig(vmConf)

	// Init WASI
	var wasi = vm.GetImportObject(wasmedge.WASI)
	wasi.InitWasi(
		os.Args[1:],     // The args
		os.Environ(),    // The envs
		[]string{".:."}, // The mapping directories
	)

	// Register WasmEdge-tensorflow and WasmEdge-image
	var tfobj = wasmedge.NewTensorflowImportObject()
	var tfliteobj = wasmedge.NewTensorflowLiteImportObject()
	vm.RegisterImport(tfobj)
	vm.RegisterImport(tfliteobj)
	var imgobj = wasmedge.NewImageImportObject()
	vm.RegisterImport(imgobj)

	// Load and validate the AOT-compiled wasm module
	vm.LoadWasmFile("rust_mobilenet_food_lib.so")
	vm.Validate()

	return vmConf, vm
}
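The data-ID handshake in app.go is easy to miss: the stream function only observes frames tagged `ImageDataKey` (0x10), and `Handler` returns 0x11 as the tag for whatever it emits downstream. The following is a hypothetical, std-only model of that routing rule, not YoMo's API; it simply filters tagged frames and re-tags each handler result.

```rust
/// Tag of frames the stream function observes (ImageDataKey in app.go).
pub const OBSERVED_TAG: u8 = 0x10;
/// Tag attached to what the handler emits (the 0x11 returned by Handler).
pub const RESULT_TAG: u8 = 0x11;

/// Hypothetical model of app.go's routing: drop frames with any other tag,
/// run `handler` on each observed payload, and re-tag its output.
pub fn route<F>(frames: &[(u8, Vec<u8>)], mut handler: F) -> Vec<(u8, Vec<u8>)>
where
    F: FnMut(&[u8]) -> Vec<u8>,
{
    frames
        .iter()
        .filter(|(tag, _)| *tag == OBSERVED_TAG)
        .map(|(_, payload)| (RESULT_TAG, handler(payload.as_slice())))
        .collect()
}
```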

In action

Finally, we can start YoMo and see the entire data processing pipeline in action. Start the YoMo CLI application from the project folder. The YAML file defines the port YoMo should listen on and the workflow handler to trigger for incoming data. Note that the flow name image-recognition matches the name in the aforementioned data handler app.go.

yomo serve -c ./zipper/workflow.yaml

Start the handler function by running the aforementioned app.go program.

cd flow
go run --tags "tensorflow image" app.go

Start a simulated data source by sending a video to YoMo. The video is a series of image frames. The WasmEdge function in app.go will be invoked against every image frame in the video.

# Download a video file
wget -P source 'https://github.com/yomorun/yomo-wasmedge-tensorflow/releases/download/v0.1.0/hot-dog.mp4'

# Stream the video to YoMo
go run ./source/main.go ./source/hot-dog.mp4

You can see the output from the WasmEdge handler function in the console. It prints the names of the objects detected in each image frame in the video.
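Conceptually, the demo is a per-frame pipeline: the source streams frames, the handler runs once per frame, and results flow back out. The sketch below is an in-process analogue built on `std::sync::mpsc` channels and two threads; `classify` stands in for the WasmEdge `infer` call, and nothing here uses YoMo or QUIC.

```rust
use std::sync::mpsc;
use std::thread;

/// In-process analogue of the demo pipeline: a source thread sends frames,
/// a handler thread classifies each one, and the caller collects results in order.
pub fn run_pipeline<F>(frames: Vec<Vec<u8>>, classify: F) -> Vec<String>
where
    F: Fn(&[u8]) -> String + Send + 'static,
{
    let (frame_tx, frame_rx) = mpsc::channel::<Vec<u8>>();
    let (result_tx, result_rx) = mpsc::channel::<String>();

    // Source: plays the role of source/main.go streaming video frames.
    let source = thread::spawn(move || {
        for frame in frames {
            if frame_tx.send(frame).is_err() {
                break; // the handler has gone away
            }
        }
        // frame_tx is dropped here, which ends the handler's loop.
    });

    // Handler: invoked once per frame, like Handler in app.go.
    let handler = thread::spawn(move || {
        for frame in frame_rx {
            if result_tx.send(classify(frame.as_slice())).is_err() {
                break;
            }
        }
        // result_tx is dropped here, which ends the collector below.
    });

    let results: Vec<String> = result_rx.iter().collect();
    source.join().expect("source thread panicked");
    handler.join().expect("handler thread panicked");
    results
}
```

Dropping a channel's sender is what ends the receiving loop, so the source thread's exit propagates shutdown through the handler to the collector without any extra signalling.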

What's next

In this article, we have seen how to use the WasmEdge TensorFlow API and Golang SDK in the YoMo framework to process an image stream in near real time.

In collaboration with YoMo, we will soon deploy WasmEdge in production in smart factories for a variety of assembly line tasks. WasmEdge is the software runtime for edge computing!

Reactr

Reactr is a fast function scheduling library written in Go. Reactr is designed to be flexible, with the ability to run embedded in your Go applications and first-class support for WebAssembly. Taking advantage of Go's superior concurrency capabilities, Reactr can manage and execute hundreds of WebAssembly runtime instances at once, making it a great framework for server-side applications.

Like the WasmEdge Go SDK, Reactr allows you to run WebAssembly functions in Go. What makes Reactr unique is its rich set of host functions in Go, which provide access to networks, databases, and more. Reactr then provides Rust (as well as Swift and AssemblyScript) APIs to call those host functions from within the WebAssembly function.

In this article, we will show you how to use WasmEdge together with Reactr to take advantage of the best of both worlds. WasmEdge is the fastest and most extensible WebAssembly runtime. It is also the fastest in Reactr's official test suite. We will show you how to run Rust functions compiled to WebAssembly as well as JavaScript programs in WasmEdge and Reactr.

WasmEdge provides advanced support for JavaScript including mixing Rust with JavaScript for improved performance.

Prerequisites

You need to have Rust, Go, and WasmEdge installed on your system.

The GCC compiler (installed via the build-essential package) is also needed for WasmEdge.

sudo apt-get update
sudo apt-get -y upgrade
sudo apt install build-essential

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
source $HOME/.cargo/env
rustup target add wasm32-wasi

curl -OL https://golang.org/dl/go1.17.5.linux-amd64.tar.gz
sudo tar -C /usr/local -xvf go1.17.5.linux-amd64.tar.gz
export PATH=$PATH:/usr/local/go/bin

wget -qO- https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install.sh | bash
source $HOME/.wasmedge/env

Hello world

A simple hello world example for Reactr is available here.

Hello world: Rust function compiled to WebAssembly

Let's first create a simple Rust function to echo hello.

The Rust function HelloEcho::run() is as follows. It will be exposed to the Go host application through Reactr.

use suborbital::runnable::*;

struct HelloEcho{}

impl Runnable for HelloEcho {
    fn run(&self, input: Vec<u8>) -> Result<Vec<u8>, RunErr> {
        let in_string = String::from_utf8(input).unwrap();
        Ok(format!("hello {}", in_string).as_bytes().to_vec())
    }
}
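To show the shape of the Runnable contract without pulling in the suborbital crate, here is a self-contained sketch with local stand-ins for `Runnable` and `RunErr`. These are assumptions modelled on how the types are used in this article (`run(&self, Vec<u8>)` and `RunErr::new(code, message)`); the real definitions live in `suborbital::runnable` and may differ. Unlike the example above, it returns an error for non-UTF-8 input instead of panicking in `unwrap()`.

```rust
/// Local stand-in for suborbital's RunErr: an error code plus a message.
#[derive(Debug, PartialEq)]
pub struct RunErr {
    pub code: i32,
    pub message: String,
}

impl RunErr {
    pub fn new(code: i32, message: &str) -> Self {
        RunErr { code, message: message.to_string() }
    }
}

/// Local stand-in for suborbital's Runnable trait: bytes in, bytes out.
pub trait Runnable {
    fn run(&self, input: Vec<u8>) -> Result<Vec<u8>, RunErr>;
}

pub struct HelloEcho {}

impl Runnable for HelloEcho {
    fn run(&self, input: Vec<u8>) -> Result<Vec<u8>, RunErr> {
        // Reject invalid UTF-8 with an error value instead of panicking.
        let in_string = String::from_utf8(input)
            .map_err(|e| RunErr::new(400, &e.to_string()))?;
        Ok(format!("hello {}", in_string).into_bytes())
    }
}
```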

Let's build the Rust function into a WebAssembly bytecode file.

cd hello-echo
cargo build --target wasm32-wasi --release
cp target/wasm32-wasi/release/hello_echo.wasm ..
cd ..

Hello world: Go host application

Next, let's look at the Go host app that executes the WebAssembly functions.

The runBundle() function executes the run() function in the Runnable struct once.

func runBundle() {
	r := rt.New()
	doWasm := r.Register("hello-echo", rwasm.NewRunner("./hello_echo.wasm"))

	res, err := doWasm([]byte("wasmWorker!")).Then()
	if err != nil {
		fmt.Println(err)
		return
	}

	fmt.Println(string(res.([]byte)))
}

The runGroup() function executes the Rust-compiled WebAssembly run() function multiple times asynchronously in a group, and receives the results as they come in.

func runGroup() {
	r := rt.New()
	doWasm := r.Register("hello-echo", rwasm.NewRunner("./hello_echo.wasm"))

	grp := rt.NewGroup()
	for i := 0; i < 100000; i++ {
		grp.Add(doWasm([]byte(fmt.Sprintf("world %d", i))))
	}

	if err := grp.Wait(); err != nil {
		fmt.Println(err)
	}
}
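`runGroup()` fans 100,000 calls out and waits for all of them. A rough std-only Rust analogue of that fan-out/wait pattern is sketched below using scoped threads (Rust 1.63 or newer). It splits the inputs across a fixed number of worker threads, keeps results in input order, and reports the first error in input order. Reactr schedules work on its own worker pool, which this sketch does not model, and `run_group` is a hypothetical name.

```rust
use std::thread;

/// Runs `job` over every input on up to `workers` scoped threads and waits for
/// all of them. Returns the outputs in input order, or the first error in input
/// order. Within a worker's chunk, processing stops at that chunk's first error.
pub fn run_group<T, R, E, F>(inputs: &[T], workers: usize, job: F) -> Result<Vec<R>, E>
where
    T: Sync,
    R: Send,
    E: Send,
    F: Fn(&T) -> Result<R, E> + Sync,
{
    if inputs.is_empty() {
        return Ok(Vec::new());
    }
    let workers = workers.max(1);
    // Ceiling division so every input lands in some chunk.
    let chunk_size = (inputs.len() + workers - 1) / workers;
    let job = &job;

    thread::scope(|s| -> Result<Vec<R>, E> {
        // Start one worker per chunk; each collects its chunk's results in order.
        let handles: Vec<_> = inputs
            .chunks(chunk_size)
            .map(|chunk| s.spawn(move || chunk.iter().map(job).collect::<Result<Vec<R>, E>>()))
            .collect();

        // Join in spawn order so the combined output keeps the input order.
        // Returning early is fine: the scope still joins the remaining threads.
        let mut out = Vec::with_capacity(inputs.len());
        for handle in handles {
            out.extend(handle.join().expect("worker thread panicked")?);
        }
        Ok(out)
    })
}
```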

Finally, let's run the Go host application and see the results printed to the console.

You must use the -tags wasmedge flag to take advantage of the performance and extended WebAssembly APIs provided by WasmEdge.

go mod tidy
go run -tags wasmedge main.go

Database query

In this example, we will demonstrate how to use Reactr host functions and APIs to query a PostgreSQL database from your WebAssembly function.

Database query: Install and set up a PostgreSQL database

We will start a PostgreSQL instance through Docker.

docker pull postgres
docker run --name reactr-postgres -p 5432:5432 -e POSTGRES_PASSWORD=12345 -d postgres

Next, let's create a database and populate it with some sample data.

$ docker run -it --rm --network host postgres psql -h 127.0.0.1 -U postgres
postgres=# CREATE DATABASE reactr;
postgres=# \c reactr;

# Create a table:
postgres=# CREATE TABLE users (
    uuid varchar(100) CONSTRAINT firstkey PRIMARY KEY,
    email varchar(50) NOT NULL,
    created_at date,
    state char(1),
    identifier integer
);

Leave this running and start another terminal window to interact with this PostgreSQL server.

Database query: Rust function compiled to WebAssembly

Let's create a Rust function to access the PostgreSQL database.

The Rust function RsDbtest::run() is as follows. It will be exposed to the Go host application through Reactr. It uses named queries such as PGInsertUser and PGSelectUserWithUUID to operate on the database. Those queries are defined in the Go host application, and we will see them later.

use suborbital::runnable::*;
use suborbital::db;
use suborbital::util;
use suborbital::db::query;
use suborbital::log;
use uuid::Uuid;

struct RsDbtest{}

impl Runnable for RsDbtest {
    fn run(&self, _: Vec<u8>) -> Result<Vec<u8>, RunErr> {
        let uuid = Uuid::new_v4().to_string();

        let mut args: Vec<query::QueryArg> = Vec::new();
        args.push(query::QueryArg::new("uuid", uuid.as_str()));
        args.push(query::QueryArg::new("email", "connor@suborbital.dev"));

        match db::insert("PGInsertUser", args) {
            Ok(_) => log::info("insert successful"),
            Err(e) => {
                return Err(RunErr::new(500, e.message.as_str()))
            }
        };

        let mut args2: Vec<query::QueryArg> = Vec::new();
        args2.push(query::QueryArg::new("uuid", uuid.as_str()));

        match db::update("PGUpdateUserWithUUID", args2.clone()) {
            Ok(rows) => log::info(format!("update: {}", util::to_string(rows).as_str()).as_str()),
            Err(e) => {
                return Err(RunErr::new(500, e.message.as_str()))
            }
        }

        match db::select("PGSelectUserWithUUID", args2.clone()) {
            Ok(result) => log::info(format!("select: {}", util::to_string(result).as_str()).as_str()),
            Err(e) => {
                return Err(RunErr::new(500, e.message.as_str()))
            }
        }

        match db::delete("PGDeleteUserWithUUID", args2.clone()) {
            Ok(rows) => log::info(format!("delete: {}", util::to_string(rows).as_str()).as_str()),
            Err(e) => {
                return Err(RunErr::new(500, e.message.as_str()))
            }
        }

        ... ...
    }
}

Let's build the Rust function into a WebAssembly bytecode file.

cd rs-db
cargo build --target wasm32-wasi --release
cp target/wasm32-wasi/release/rs_db.wasm ..
cd ..

Database query: Go host application

The Go host app first defines the SQL queries and gives each of them a name. We will then pass those queries to the Reactr runtime as a configuration.

func main() {
	dbConnString, exists := os.LookupEnv("REACTR_DB_CONN_STRING")
	if !exists {
		fmt.Println("skipping as conn string env var not set")
		return
	}

	q1 := rcap.Query{
		Type:     rcap.QueryTypeInsert,
		Name:     "PGInsertUser",
		VarCount: 2,
		Query: `
		INSERT INTO users (uuid, email, created_at, state, identifier)
		VALUES ($1, $2, NOW(), 'A', 12345)`,
	}

	q2 := rcap.Query{
		Type:     rcap.QueryTypeSelect,
		Name:     "PGSelectUserWithUUID",
		VarCount: 1,
		Query: `
		SELECT * FROM users
		WHERE uuid = $1`,
	}

	q3 := rcap.Query{
		Type:     rcap.QueryTypeUpdate,
		Name:     "PGUpdateUserWithUUID",
		VarCount: 1,
		Query: `
		UPDATE users SET state='B' WHERE uuid = $1`,
	}

	q4 := rcap.Query{
		Type:     rcap.QueryTypeDelete,
		Name:     "PGDeleteUserWithUUID",
		VarCount: 1,
		Query: `
		DELETE FROM users WHERE uuid = $1`,
	}

	config := rcap.DefaultConfigWithDB(vlog.Default(), rcap.DBTypePostgres, dbConnString, []rcap.Query{q1, q2, q3, q4})

	r, err := rt.NewWithConfig(config)
	if err != nil {
		fmt.Println(err)
		return
	}

	... ...
}
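The point of naming the queries on the host is that the WebAssembly guest never sends SQL text, only a query name plus arguments, and the host checks them against `VarCount`. The following std-only sketch models that contract with a hypothetical `QueryRegistry`; it is not Reactr's implementation and does not talk to PostgreSQL.

```rust
use std::collections::HashMap;

/// SQL text plus the number of positional parameters ($1..$n) it expects,
/// mirroring the Query and VarCount fields of rcap.Query above.
pub struct NamedQuery {
    pub sql: &'static str,
    pub var_count: usize,
}

/// Hypothetical host-side registry of named queries.
pub struct QueryRegistry {
    queries: HashMap<&'static str, NamedQuery>,
}

impl QueryRegistry {
    pub fn new() -> Self {
        QueryRegistry { queries: HashMap::new() }
    }

    pub fn register(&mut self, name: &'static str, sql: &'static str, var_count: usize) {
        self.queries.insert(name, NamedQuery { sql, var_count });
    }

    /// Resolves a guest request to (SQL text, positional args). The SQL is never
    /// built from guest input; the arguments are kept separate for binding.
    pub fn resolve<'a>(&self, name: &str, args: &[&'a str]) -> Result<(&'static str, Vec<&'a str>), String> {
        let q = self
            .queries
            .get(name)
            .ok_or_else(|| format!("unknown query {}", name))?;
        if args.len() != q.var_count {
            return Err(format!("{} expects {} args, got {}", name, q.var_count, args.len()));
        }
        Ok((q.sql, args.to_vec()))
    }
}
```

For instance, `PGSelectUserWithUUID` registered with one variable rejects a call that passes no arguments before anything reaches the database.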

Then, we can run the WebAssembly function from Reactr.

func main() {
	... ...

	doWasm := r.Register("rs-db", rwasm.NewRunner("./rs_db.wasm"))

	res, err := doWasm(nil).Then()
	if err != nil {
		fmt.Println(err)
		return
	}

	fmt.Println(string(res.([]byte)))
}

Finally, let's run the Go host application and see the results printed to the console.

You must use the -tags wasmedge flag to take advantage of the performance and extended WebAssembly APIs provided by WasmEdge.

export REACTR_DB_CONN_STRING='postgresql://postgres:12345@127.0.0.1:5432/reactr'
go mod tidy
go run -tags wasmedge main.go

Embed JavaScript in Go

As we mentioned, a key feature of the WasmEdge Runtime is its advanced JavaScript support, which allows JavaScript programs to run in lightweight, high-performance, safe, multi-language, and Kubernetes-managed WasmEdge containers. A simple example of an embedded JavaScript function in Reactr is available here.

JavaScript example

The JavaScript example function is very simple. It just returns a string value.

let h = 'hello';
let w = 'wasmedge';
`${h} ${w}`;

JavaScript example: Go host application

The Go host app uses the Reactr API to run WasmEdge's standard JavaScript interpreter rs_embed_js.wasm. You can build your own version of JavaScript interpreter by modifying this Rust project.

Learn more about how to embed JavaScript code in Rust, and how to use Rust to implement JavaScript APIs in WasmEdge.

The Go host application just needs to start the job for rs_embed_js.wasm and pass the JavaScript content to it. It can then capture and print the return value from JavaScript.

func main() {
	r := rt.New()
	doWasm := r.Register("hello-quickjs", rwasm.NewRunner("./rs_embed_js.wasm"))

	code, err := ioutil.ReadFile(os.Args[1])
	if err != nil {
		fmt.Println(err)
		return // nothing to run if the script cannot be read
	}

	res, err := doWasm(code).Then()
	if err != nil {
		fmt.Println(err)
		return
	}

	fmt.Println(string(res.([]byte)))
}

Run the Go host application as follows.

$ cd quickjs
$ go mod tidy
$ go run -tags wasmedge main.go hello.js
String(JsString(hello wasmedge))

The printed result shows the type information of the string in Rust and Go APIs. You can strip out this information by changing the Rust or Go applications.
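If you only need the bare string, one quick option is to strip the wrapper text from the printed value. The sketch below assumes the output always has exactly the `String(JsString(...))` shape shown above; changing the interpreter to print the raw string value is the cleaner fix, and the function name is hypothetical.

```rust
/// Strips a `String(JsString(...))` wrapper from a printed JavaScript result,
/// ignoring trailing whitespace. Returns the input unchanged if it is not wrapped.
pub fn strip_js_string_wrapper(s: &str) -> &str {
    s.trim_end()
        .strip_prefix("String(JsString(")
        .and_then(|rest| rest.strip_suffix("))"))
        .unwrap_or(s)
}
```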

JavaScript example: Feature examples

WasmEdge supports many advanced JavaScript features. For the next step, you could try our React SSR example to generate an HTML UI from a Reactr function!

You can just build the dist/main.js from the React SSR example, and copy it over to this example folder to see it in action!

$ cd quickjs
# copy over the dist/main.js file from the react ssr example
$ go mod tidy
$ go run -tags wasmedge main.go main.js
<div data-reactroot=""><div>This is home</div><div><div>This is page</div></div></div>
UnDefined

Serverless platforms

This part has been moved to https://wasmedge.org/docs/category/use-cases. Please use our new docs.

Rust and WebAssembly Serverless functions in Vercel

In this article, we will show you two serverless functions in Rust and WasmEdge deployed on Vercel: an image processing function and a TensorFlow inference function.

For more insight into why to use WasmEdge on Vercel, please refer to the article Rust and WebAssembly Serverless Functions in Vercel.

Prerequisite

Since our demo WebAssembly functions are written in Rust, you will need a Rust compiler. Make sure that you install the wasm32-wasi compiler target as follows, in order to generate WebAssembly bytecode.

rustup target add wasm32-wasi

The demo application front end is written in Next.js, and deployed on Vercel. We will assume that you already have the basic knowledge of how to work with Vercel.

Example 1: Image processing

Our first demo application allows users to upload an image and then invoke a serverless function to turn it into black and white. A live demo deployed on Vercel is available.

Fork the demo application’s GitHub repo to get started. To deploy the application on Vercel, import the GitHub repo from the Vercel for GitHub web page.

This repo is a standard Next.js application for the Vercel platform. The backend serverless function is in the api/functions/image-grayscale folder. The src/main.rs file contains the Rust program’s source code. The Rust program reads image data from STDIN and writes the black-and-white image to STDOUT.

use std::io::{self, Read, Write};
use image::{ImageOutputFormat, ImageFormat};

fn main() {
    // Read the whole uploaded image from STDIN
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Detect the input format, decode it, and convert it to grayscale
    let image_format_detected: ImageFormat = image::guess_format(&buf).unwrap();
    let img = image::load_from_memory(&buf).unwrap();
    let filtered = img.grayscale();

    // Keep GIFs as GIF; encode everything else as PNG
    let mut buf = vec![];
    match image_format_detected {
        ImageFormat::Gif => {
            filtered.write_to(&mut buf, ImageOutputFormat::Gif).unwrap();
        },
        _ => {
            filtered.write_to(&mut buf, ImageOutputFormat::Png).unwrap();
        },
    };

    // Write the processed image to STDOUT
    io::stdout().write_all(&buf).unwrap();
    io::stdout().flush().unwrap();
}
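`img.grayscale()` does the actual work in the program above. To show roughly what such a conversion computes, here is a std-only sketch that maps packed RGB8 pixels to 8-bit luma with the Rec. 709 weights 0.2126, 0.7152, and 0.0722. The image crate's own conversion may use different weights or rounding, so treat this as an illustration rather than a drop-in replacement; the function name is hypothetical.

```rust
/// Converts a packed RGB8 buffer (R, G, B, R, G, B, ...) to one luma byte per
/// pixel using Rec. 709 weights. Trailing bytes that do not form a whole
/// pixel are ignored.
pub fn rgb8_to_luma8(rgb: &[u8]) -> Vec<u8> {
    rgb.chunks_exact(3)
        .map(|px| {
            let y = 0.2126 * px[0] as f32 + 0.7152 * px[1] as f32 + 0.0722 * px[2] as f32;
            // Round to the nearest level and keep the result in 0..=255.
            y.round().clamp(0.0, 255.0) as u8
        })
        .collect()
}
```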

You can use Rust’s cargo tool to build the Rust program into WebAssembly bytecode or native code.

cd api/functions/image-grayscale/
cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

cp target/wasm32-wasi/release/grayscale.wasm ../../

Vercel runs api/pre.sh upon setting up the serverless environment. It installs the WasmEdge runtime and then compiles each WebAssembly bytecode program into a native .so library for faster execution.

The api/hello.js file conforms to the Vercel serverless specification. It loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data via STDIN. Notice that api/hello.js runs the compiled grayscale.so file generated by api/pre.sh for better performance.

const fs = require('fs');
const { spawn } = require('child_process');
const path = require('path');

module.exports = (req, res) => {
  const wasmedge = spawn(
    path.join(__dirname, 'wasmedge'),
    [path.join(__dirname, 'grayscale.so')]);

  let d = [];
  wasmedge.stdout.on('data', (data) => {
    d.push(data);
  });

  wasmedge.on('close', (code) => {
    let buf = Buffer.concat(d);

    res.setHeader('Content-Type', req.headers['image-type']);
    res.send(buf);
  });

  wasmedge.stdin.write(req.body);
  wasmedge.stdin.end('');
}
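The spawn-and-pipe pattern in api/hello.js is not specific to Node. As a sketch of the same idea in Rust, the function below starts a child process, writes the request body to its stdin from a separate thread (so a child that writes output while still reading input cannot deadlock the parent), closes stdin, and collects stdout. Unlike the JavaScript handler, which ignores `code`, it also treats a non-zero exit status as an error; `run_filter` is a hypothetical name.

```rust
use std::io::{self, Read, Write};
use std::process::{Command, Stdio};
use std::thread;

/// Runs `program args...`, feeds `input` to its stdin, and returns its stdout.
pub fn run_filter(program: &str, args: &[&str], input: Vec<u8>) -> io::Result<Vec<u8>> {
    let mut child = Command::new(program)
        .args(args)
        .stdin(Stdio::piped())
        .stdout(Stdio::piped())
        .spawn()?;

    // Write stdin on its own thread; dropping it afterwards closes the pipe,
    // like wasmedge.stdin.end('') in the JavaScript version.
    let mut stdin = child.stdin.take().expect("stdin was piped");
    let writer = thread::spawn(move || -> io::Result<()> {
        stdin.write_all(&input)?;
        Ok(())
    });

    // Collect everything the child writes, like the 'data' handler above.
    let mut out = Vec::new();
    child.stdout.take().expect("stdout was piped").read_to_end(&mut out)?;
    writer.join().expect("writer thread panicked")?;

    let status = child.wait()?;
    if !status.success() {
        return Err(io::Error::new(
            io::ErrorKind::Other,
            format!("{} exited with {}", program, status),
        ));
    }
    Ok(out)
}
```

Calling it as `run_filter("./wasmedge", &["grayscale.so"], body)` would mirror the handler above, assuming both files sit in the working directory.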

That's it. Deploy the repo to Vercel and you now have a Vercel Jamstack app with a high-performance Rust and WebAssembly based serverless backend.

Example 2: AI inference

The second demo application allows users to upload an image and then invoke a serverless function to classify the main subject in the image.

It is in the same GitHub repo as the previous example but in the tensorflow branch. Note: when you import this GitHub repo on the Vercel website, it will create a preview URL for each branch. The tensorflow branch would have its own deployment URL.

The backend serverless function for image classification is in the api/functions/image-classification folder in the tensorflow branch. The src/main.rs file contains the Rust program’s source code. The Rust program reads image data from STDIN and writes the text result to STDOUT. It uses the WasmEdge TensorFlow API to run the AI inference.

use std::io::{self, Read};

pub fn main() {
    // Step 1: Load the TFLite model
    let model_data: &[u8] = include_bytes!("models/mobilenet_v1_1.0_224/mobilenet_v1_1.0_224_quant.tflite");
    let labels = include_str!("models/mobilenet_v1_1.0_224/labels_mobilenet_quant_v1_224.txt");

    // Step 2: Read image from STDIN
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Step 3: Resize the input image for the tensorflow model
    let flat_img = wasmedge_tensorflow_interface::load_jpg_image_to_rgb8(&buf, 224, 224);

    // Step 4: AI inference
    let mut session = wasmedge_tensorflow_interface::Session::new(&model_data, wasmedge_tensorflow_interface::ModelType::TensorFlowLite);
    session.add_input("input", &flat_img, &[1, 224, 224, 3])
           .run();
    let res_vec: Vec<u8> = session.get_output("MobilenetV1/Predictions/Reshape_1");

    // Step 5: Find the label that corresponds to the highest probability in res_vec
    // ... ...
    let mut label_lines = labels.lines();
    for _i in 0..max_index {
        label_lines.next();
    }

    // Step 6: Generate the output text
    let class_name = label_lines.next().unwrap().to_string();
    if max_value > 50 {
        println!("It {} a <a href='https://www.google.com/search?q={}'>{}</a> in the picture", confidence.to_string(), class_name, class_name);
    } else {
        println!("It does not appear to be any food item in the picture.");
    }
}
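The input shape `[1, 224, 224, 3]` passed to `add_input()` describes one 224x224 image with three color channels. Assuming `load_jpg_image_to_rgb8` returns pixels row by row with interleaved R, G, B bytes (the usual NHWC layout for RGB8 data), the flat offset of each element and the expected buffer length can be computed as in this sketch; the helper names are hypothetical.

```rust
/// Flat offset of element (n, y, x, c) in a tensor of shape [N, H, W, C]
/// stored in row-major NHWC order.
pub fn nhwc_offset(shape: [usize; 4], n: usize, y: usize, x: usize, c: usize) -> usize {
    let [_, h, w, ch] = shape;
    ((n * h + y) * w + x) * ch + c
}

/// Number of bytes an RGB8 input buffer of this shape must contain.
pub fn tensor_len(shape: [usize; 4]) -> usize {
    shape.iter().product()
}
```

With the shape above, `flat_img` should therefore hold 1 x 224 x 224 x 3 = 150,528 bytes.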

You can use the cargo tool to build the Rust program into WebAssembly bytecode or native code.

cd api/functions/image-classification/
cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

cp target/wasm32-wasi/release/classify.wasm ../../

Again, the api/pre.sh script installs the WasmEdge runtime and its TensorFlow dependencies for this application. It also compiles the classify.wasm bytecode program to the classify.so native shared library at the time of deployment.

The api/hello.js file conforms to the Vercel serverless specification. It loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data via STDIN. Notice that api/hello.js runs the compiled classify.so file generated by api/pre.sh for better performance.

const fs = require('fs');
const { spawn } = require('child_process');
const path = require('path');

module.exports = (req, res) => {
  const wasmedge = spawn(
    path.join(__dirname, 'wasmedge-tensorflow-lite'),
    [path.join(__dirname, 'classify.so')],
    {env: {'LD_LIBRARY_PATH': __dirname}}
  );

  let d = [];
  wasmedge.stdout.on('data', (data) => {
    d.push(data);
  });

  wasmedge.on('close', (code) => {
    res.setHeader('Content-Type', `text/plain`);
    res.send(d.join(''));
  });

  wasmedge.stdin.write(req.body);
  wasmedge.stdin.end('');
}
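This handler sets `LD_LIBRARY_PATH` so the dynamic loader can find the TensorFlow Lite shared libraries, presumably installed by api/pre.sh next to the `wasmedge-tensorflow-lite` binary. One subtlety: Node's `spawn()` uses the given `env` object as the child's entire environment, whereas Rust's `Command::env()` adds to the inherited environment. The sketch below shows both behaviours; the `isolate` flag and the function name are illustrative assumptions.

```rust
use std::io;
use std::process::{Command, Output};

/// Runs `program args...` with LD_LIBRARY_PATH set to `lib_dir` and captures
/// its output. With `isolate` set, the inherited environment is cleared first,
/// which matches what Node's spawn() does when given an `env` option.
pub fn run_with_lib_path(program: &str, args: &[&str], lib_dir: &str, isolate: bool) -> io::Result<Output> {
    let mut cmd = Command::new(program);
    cmd.args(args);
    if isolate {
        // Start from an empty environment, as Node does with {env: {...}}.
        cmd.env_clear();
    }
    cmd.env("LD_LIBRARY_PATH", lib_dir).output()
}
```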

You can now deploy your forked repo to Vercel and have a web app for subject classification.

Next, it's your turn to use the vercel-wasm-runtime repo as a template to develop your own Rust serverless functions in Vercel. We look forward to seeing your work.

WebAssembly Serverless Functions in Netlify

In this article, we will show you two serverless functions in Rust and WasmEdge deployed on Netlify: an image processing function and a TensorFlow inference function.

For more insight into why to use WasmEdge on Netlify, please refer to the article WebAssembly Serverless Functions in Netlify.

Prerequisite

Since our demo WebAssembly functions are written in Rust, you will need a Rust compiler. Make sure that you install the wasm32-wasi compiler target as follows, in order to generate WebAssembly bytecode.

rustup target add wasm32-wasi

The demo application front end is written in Next.js, and deployed on Netlify. We will assume that you already have the basic knowledge of how to work with Next.js and Netlify.

Example 1: Image processing

Our first demo application allows users to upload an image and then invoke a serverless function to turn it into black and white. A live demo deployed on Netlify is available.

Fork the demo application’s GitHub repo to get started. To deploy the application on Netlify, add your GitHub repo to Netlify.

This repo is a standard Next.js application for the Netlify platform. The backend serverless function is in the api/functions/image-grayscale folder. The src/main.rs file contains the Rust program’s source code. The Rust program reads image data from STDIN and writes the black-and-white image to STDOUT.

use std::io::{self, Read, Write};
use image::{ImageOutputFormat, ImageFormat};

fn main() {
    // Read the whole uploaded image from STDIN
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Detect the input format, decode it, and convert it to grayscale
    let image_format_detected: ImageFormat = image::guess_format(&buf).unwrap();
    let img = image::load_from_memory(&buf).unwrap();
    let filtered = img.grayscale();

    // Keep GIFs as GIF; encode everything else as PNG
    let mut buf = vec![];
    match image_format_detected {
        ImageFormat::Gif => {
            filtered.write_to(&mut buf, ImageOutputFormat::Gif).unwrap();
        },
        _ => {
            filtered.write_to(&mut buf, ImageOutputFormat::Png).unwrap();
        },
    };

    // Write the processed image to STDOUT
    io::stdout().write_all(&buf).unwrap();
    io::stdout().flush().unwrap();
}

You can use Rust’s cargo tool to build the Rust program into WebAssembly bytecode or native code.

cd api/functions/image-grayscale/
cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

cp target/wasm32-wasi/release/grayscale.wasm ../../

The Netlify function runs api/pre.sh upon setting up the serverless environment. It installs the WasmEdge runtime and then compiles each WebAssembly bytecode program into a native .so library for faster execution.

The api/hello.js script loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data via STDIN. Notice api/hello.js runs the compiled grayscale.so file generated by api/pre.sh for better performance.

const fs = require('fs');
const { spawn } = require('child_process');
const path = require('path');

module.exports = (req, res) => {
  const wasmedge = spawn(
    path.join(__dirname, 'wasmedge'),
    [path.join(__dirname, 'grayscale.so')]);

  let d = [];
  wasmedge.stdout.on('data', (data) => {
    d.push(data);
  });

  wasmedge.on('close', (code) => {
    let buf = Buffer.concat(d);

    res.setHeader('Content-Type', req.headers['image-type']);
    res.send(buf);
  });

  wasmedge.stdin.write(req.body);
  wasmedge.stdin.end('');
}

That's it. Deploy the repo to Netlify and you now have a Netlify Jamstack app with a high-performance Rust and WebAssembly based serverless backend.

Example 2: AI inference

The second demo application allows users to upload an image and then invoke a serverless function to classify the main subject in the image.

It is in the same GitHub repo as the previous example but in the tensorflow branch. The backend serverless function for image classification is in the api/functions/image-classification folder in the tensorflow branch. The src/main.rs file contains the Rust program’s source code. The Rust program reads image data from STDIN and writes the text result to STDOUT. It uses the WasmEdge TensorFlow API to run the AI inference.

use std::io::{self, Read};

pub fn main() {
    // Step 1: Load the TFLite model
    let model_data: &[u8] = include_bytes!("models/mobilenet_v1_1.0_224/mobilenet_v1_1.0_224_quant.tflite");
    let labels = include_str!("models/mobilenet_v1_1.0_224/labels_mobilenet_quant_v1_224.txt");

    // Step 2: Read image from STDIN
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Step 3: Resize the input image for the tensorflow model
    let flat_img = wasmedge_tensorflow_interface::load_jpg_image_to_rgb8(&buf, 224, 224);

    // Step 4: AI inference
    let mut session = wasmedge_tensorflow_interface::Session::new(&model_data, wasmedge_tensorflow_interface::ModelType::TensorFlowLite);
    session.add_input("input", &flat_img, &[1, 224, 224, 3])
           .run();
    let res_vec: Vec<u8> = session.get_output("MobilenetV1/Predictions/Reshape_1");

    // Step 5: Find the label that corresponds to the highest probability in res_vec
    // ... ...
    let mut label_lines = labels.lines();
    for _i in 0..max_index {
        label_lines.next();
    }

    // Step 6: Generate the output text
    let class_name = label_lines.next().unwrap().to_string();
    if max_value > 50 {
        println!("It {} a <a href='https://www.google.com/search?q={}'>{}</a> in the picture", confidence.to_string(), class_name, class_name);
    } else {
        println!("It does not appear to be any food item in the picture.");
    }
}

You can use the cargo tool to build the Rust program into WebAssembly bytecode or native code.

cd api/functions/image-classification/

cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

cp target/wasm32-wasi/release/classify.wasm ../../

Again, the api/pre.sh script installs the WasmEdge runtime and its TensorFlow dependencies for this application. It also compiles the classify.wasm bytecode program into the classify.so native shared library at deployment time.

The api/hello.js script loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data via STDIN. Note that api/hello.js runs the compiled classify.so file generated by api/pre.sh for better performance.

const { spawn } = require('child_process');
const path = require('path');

module.exports = (req, res) => {
  // Run the native classify.so with the WasmEdge TensorFlow Lite runtime
  const wasmedge = spawn(
    path.join(__dirname, 'wasmedge-tensorflow-lite'),
    [path.join(__dirname, 'classify.so')],
    {env: {'LD_LIBRARY_PATH': __dirname}}
  );

  // Collect everything the program writes to STDOUT
  let d = [];
  wasmedge.stdout.on('data', (data) => {
    d.push(data);
  });

  // When the program exits, return its output as plain text
  wasmedge.on('close', (code) => {
    res.setHeader('Content-Type', `text/plain`);
    res.send(d.join(''));
  });

  // Pass the uploaded image to the program via STDIN
  wasmedge.stdin.write(req.body);
  wasmedge.stdin.end('');
};
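The core of hello.js is a simple process protocol: spawn the runtime, write the request body to its STDIN, close STDIN, and collect STDOUT until the process exits. The sketch below expresses the same pattern with Rust's standard library, purely for illustration; it is not part of the repo. The helper name run_with_stdin is hypothetical, and the example uses cat as a stand-in for wasmedge-tensorflow-lite.

```rust
use std::io::{self, Write};
use std::process::{Command, Stdio};

/// Runs `program` with `args`, writes `input` to its STDIN, closes STDIN,
/// and returns everything the process wrote to STDOUT (hypothetical helper).
fn run_with_stdin(program: &str, args: &[&str], input: &[u8]) -> io::Result<Vec<u8>> {
    let mut child = Command::new(program)
        .args(args)
        .stdin(Stdio::piped())
        .stdout(Stdio::piped())
        .spawn()?;

    // Write the request body, then drop the handle so the child sees EOF
    // (the counterpart of `wasmedge.stdin.end('')` in hello.js).
    {
        let mut stdin = child.stdin.take().expect("stdin was piped");
        stdin.write_all(input)?;
    }

    // Wait for the process to exit and collect its STDOUT.
    let output = child.wait_with_output()?;
    Ok(output.stdout)
}

fn main() -> io::Result<()> {
    // `cat` echoes its input, standing in for the WasmEdge runtime here.
    let out = run_with_stdin("cat", &[], b"hello")?;
    println!("{}", String::from_utf8_lossy(&out));
    Ok(())
}
```

Writing all of the input before reading any output is safe here because the Rust functions in this chapter call read_to_end on STDIN before printing anything. A child that streams output while still reading input would need a separate writer thread to avoid filling the pipe buffer. Command::env could set LD_LIBRARY_PATH, but note that it adds to the inherited environment, whereas the env option in hello.js replaces it (call env_clear first to match that behavior).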

You can now deploy your forked repo to Netlify and have a web app for subject classification.

Next, it's your turn to develop Rust serverless functions in Netlify using the netlify-wasm-runtime repo as a template. We look forward to seeing what you build.

WebAssembly Serverless Functions in AWS Lambda

In this article, we will show you two serverless functions written in Rust, running in WasmEdge, and deployed on AWS Lambda. One is an image processing function; the other is a TensorFlow inference function.

For insight into why you might run WasmEdge on AWS Lambda, please refer to the article WebAssembly Serverless Functions in AWS Lambda.

Prerequisites

Since our demo WebAssembly functions are written in Rust, you will need a Rust compiler. Make sure you install the wasm32-wasi compiler target as follows so that you can generate WebAssembly bytecode.

rustup target add wasm32-wasi

The demo application's front end is written in Next.js, and its serverless backend is deployed on AWS Lambda. We assume that you already have basic knowledge of how to work with Next.js and Lambda.

Example 1: Image processing

Our first demo application allows users to upload an image and then invoke a serverless function to turn it into black and white. A live demo deployed through GitHub Pages is available.

Fork the demo application’s GitHub repo to get started. To deploy the application on AWS Lambda, follow the guide in the repository README.

Create the function

This repo is a standard Next.js application. The backend serverless function is in the api/functions/image-grayscale folder. The src/main.rs file contains the Rust program's source code. The Rust program reads image data from STDIN and writes the black-and-white image to STDOUT.

use std::io::{self, Read, Write};
use image::{ImageOutputFormat, ImageFormat};

fn main() {
    // Read the uploaded image from STDIN
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Detect the input format, decode the image, and convert it to grayscale
    let image_format_detected: ImageFormat = image::guess_format(&buf).unwrap();
    let img = image::load_from_memory(&buf).unwrap();
    let filtered = img.grayscale();

    // Re-encode as GIF if the input was a GIF, otherwise as PNG
    let mut buf = vec![];
    match image_format_detected {
        ImageFormat::Gif => {
            filtered.write_to(&mut buf, ImageOutputFormat::Gif).unwrap();
        },
        _ => {
            filtered.write_to(&mut buf, ImageOutputFormat::Png).unwrap();
        },
    };

    // Write the result to STDOUT (write_all and flush need the Write trait)
    io::stdout().write_all(&buf).unwrap();
    io::stdout().flush().unwrap();
}
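The heavy lifting in the program above is done by the image crate's grayscale() call. As an illustration of what that step computes, the sketch below converts packed RGB8 pixels to 8-bit luma using the Rec. 709 weights (0.2126, 0.7152, 0.0722). This is an assumption for illustration only: the exact coefficients and rounding used by the image crate may differ between versions, and the helper names luma and grayscale_rgb8 are hypothetical.

```rust
/// Converts one RGB pixel to 8-bit luma with Rec. 709 weights (illustrative only).
fn luma(r: u8, g: u8, b: u8) -> u8 {
    let y = 0.2126 * f32::from(r) + 0.7152 * f32::from(g) + 0.0722 * f32::from(b);
    // Round to the nearest integer and keep the result inside the u8 range.
    y.round().clamp(0.0, 255.0) as u8
}

/// Converts a packed RGB8 buffer (r, g, b, r, g, b, ...) to one luma byte per pixel.
/// Any trailing bytes that do not form a full pixel are ignored.
fn grayscale_rgb8(rgb: &[u8]) -> Vec<u8> {
    rgb.chunks_exact(3).map(|p| luma(p[0], p[1], p[2])).collect()
}

fn main() {
    // Pure red, green, blue, and white pixels.
    let pixels = [255, 0, 0, 0, 255, 0, 0, 0, 255, 255, 255, 255];
    // prints [54, 182, 18, 255]
    println!("{:?}", grayscale_rgb8(&pixels));
}
```

Green dominates the weighting because human vision is most sensitive to it, which is why a pure green pixel comes out much brighter than a pure blue one.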

You can use Rust’s cargo tool to build the Rust program into WebAssembly bytecode or native code.

cd api/functions/image-grayscale/

cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

cp target/wasm32-wasi/release/grayscale.wasm ../../

When we build the Docker image, api/pre.sh is executed. pre.sh installs the WasmEdge runtime and then compiles each WebAssembly bytecode program into a native .so library for faster execution.

Create the service script to load the function

The api/hello.js script loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data via STDIN. Notice that api/hello.js runs the compiled grayscale.so file generated by api/pre.sh for better performance.

const { spawn } = require('child_process');
const path = require('path');

function _runWasm(reqBody) {
  return new Promise(resolve => {
    // Run the native grayscale.so generated by pre.sh
    const wasmedge = spawn(path.join(__dirname, 'wasmedge'), [path.join(__dirname, 'grayscale.so')]);

    // Collect the binary image data written to STDOUT
    let d = [];
    wasmedge.stdout.on('data', (data) => {
      d.push(data);
    });

    // Resolve with the complete output once the process exits
    wasmedge.on('close', (code) => {
      let buf = Buffer.concat(d);
      resolve(buf);
    });

    // Send the uploaded image to the program via STDIN
    wasmedge.stdin.write(reqBody);
    wasmedge.stdin.end('');
  });
}

The exports.handler part of hello.js exports an async handler function that AWS Lambda calls with the incoming event each time the serverless function is invoked. The request body arrives as a hex-encoded string, so the handler first decodes it into a Uint8Array, passes those bytes to the function above, and returns the processed image hex-encoded in the response body. More complicated event-handling behavior may be defined based on your needs. We also need to return some Access-Control-Allow headers to avoid Cross-Origin Resource Sharing (CORS) errors when calling the serverless function from a browser. You can read more about CORS errors here if you encounter them when replicating our example.

exports.handler = async function(event, context) {
  // Decode the hex-encoded request body into raw bytes
  const typedArray = new Uint8Array(event.body.match(/[\da-f]{2}/gi).map(function (h) {
    return parseInt(h, 16);
  }));

  // Run the grayscale function on the image bytes
  let buf = await _runWasm(typedArray);

  return {
    statusCode: 200,
    headers: {
      "Access-Control-Allow-Headers" : "Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Amz-Security-Token",
      "Access-Control-Allow-Origin": "*",
      "Access-Control-Allow-Methods": "DELETE, GET, HEAD, OPTIONS, PATCH, POST, PUT"
    },
    // Hex-encode the processed image for the response
    body: buf.toString('hex')
  };
};
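The handler moves image data in and out as hex text. The sketch below shows the same encoding and decoding in Rust, which can be handy for preparing or checking test data. It is an illustration, not code from the repo; the helper names to_hex and from_hex are hypothetical. One deliberate difference: the regex in hello.js extracts hex pairs and silently skips anything else, while this decoder rejects malformed input.

```rust
/// Encodes bytes as a lowercase hex string, like `buf.toString('hex')` in Node.js.
fn to_hex(bytes: &[u8]) -> String {
    bytes.iter().map(|b| format!("{:02x}", b)).collect()
}

/// Decodes a hex string (upper- or lowercase) into bytes.
/// Returns `None` for odd-length input or any non-hex character.
fn from_hex(s: &str) -> Option<Vec<u8>> {
    // Convert every character to its 0..=15 value, failing on non-hex digits.
    let digits = s
        .chars()
        .map(|c| c.to_digit(16))
        .collect::<Option<Vec<u32>>>()?;
    if digits.len() % 2 != 0 {
        return None;
    }
    // Combine each pair of digits into one byte.
    Some(digits.chunks(2).map(|p| (p[0] * 16 + p[1]) as u8).collect())
}

fn main() {
    // The first four bytes of a PNG file.
    let image_bytes = [0x89u8, 0x50, 0x4e, 0x47];
    let encoded = to_hex(&image_bytes);
    // prints 89504e47
    println!("{}", encoded);
    assert_eq!(from_hex(&encoded), Some(image_bytes.to_vec()));
}
```

Hex doubles the payload size, but it keeps binary image data safe to carry inside a JSON string without any escaping.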

Build the Docker image for Lambda deployment

Now we have the WebAssembly bytecode function and the script to load and connect to the web request. In order to deploy them as a function service on AWS Lambda, you still need to package the whole thing into a Docker image.

We will not cover in detail how to build the Docker image and deploy it on AWS Lambda, as the Deploy section of the repository README describes those steps. However, we will highlight some lines in the Dockerfile to help you avoid some pitfalls.

FROM public.ecr.aws/lambda/nodejs:14

# Change directory to /var/task
WORKDIR /var/task

RUN yum update -y && yum install -y curl tar gzip

# Bundle and pre-compile the wasm files
COPY *.wasm ./
COPY pre.sh ./
RUN chmod +x pre.sh
RUN ./pre.sh

# Bundle the JS files
COPY *.js ./

CMD [ "hello.handler" ]

First, we build the image from AWS Lambda's Node.js base image. The advantage of using an AWS Lambda base image is that it already includes the Lambda Runtime Interface Client (RIC), which AWS Lambda requires in the container image, so we do not need to add it ourselves. Amazon Linux uses yum as its package manager, which is why the Dockerfile installs curl, tar, and gzip with yum.

These base images contain the Amazon Linux Base operating system, the runtime for a given language, dependencies and the Lambda Runtime Interface Client (RIC), which implements the Lambda Runtime API. The Lambda Runtime Interface Client allows your runtime to receive requests from and send requests to the Lambda service.

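Second, we need to put our function and all its dependencies in the /var/task directory. The WORKDIR /var/task line makes it the working directory, so the COPY and RUN commands that follow place their files there. Files in other folders will not be executed by AWS Lambda.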

Third, we need to define the default command for starting the container. CMD [ "hello.handler" ] means that the handler function in hello.js is called whenever our serverless function is invoked. Recall that we defined and exported the handler function earlier through exports.handler = ... in hello.js.

Optional: test the Docker image locally

Docker images built from AWS Lambda's base images can be tested locally by following this guide. Local testing requires the AWS Lambda Runtime Interface Emulator (RIE), which is already included in all AWS Lambda base images. To test your image, first start the Docker container, replacing myfunction:latest with the name and tag of the image you built:

docker run -p 9000:8080 myfunction:latest

This command sets a function endpoint on your local machine at http://localhost:9000/2015-03-31/functions/function/invocations.

Then, from a separate terminal window, run:

curl -XPOST "http://localhost:9000/2015-03-31/functions/function/invocations" -d '{}'

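You should then see the function's response in the terminal. Note that the handler in hello.js hex-decodes event.body: with the empty '{}' payload above, event.body is undefined, so the handler throws and the endpoint returns an error response. To process an actual image, the JSON event needs a body field containing the hex-encoded image bytes.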
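Because the handler in hello.js reads a hex string from event.body, a meaningful local test sends a JSON event of the form {"body":"<hex bytes>"}. The sketch below builds such a payload from raw image bytes; it is illustrative only, and the helper name invocation_payload is hypothetical. Its output could be saved to a file and posted with curl's -d @filename option to the local invocation URL.

```rust
use std::fmt::Write;

/// Builds a JSON event whose `body` field holds the hex-encoded image,
/// the shape the handler in hello.js decodes (hypothetical helper).
fn invocation_payload(image: &[u8]) -> String {
    let mut hex = String::with_capacity(image.len() * 2);
    for b in image {
        // Writing into a String cannot fail, so unwrap is safe here.
        write!(hex, "{:02x}", b).unwrap();
    }
    // Hex digits never need JSON escaping, so plain formatting is enough.
    format!("{{\"body\":\"{}\"}}", hex)
}

fn main() {
    // A few bytes standing in for an image file read from disk.
    let payload = invocation_payload(&[0xff, 0xd8, 0xff]);
    // prints {"body":"ffd8ff"}
    println!("{}", payload);
}
```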

If you don't want to use a base image from AWS Lambda, you can use your own base image and install the RIC and/or RIE while building your Docker image. To do so, follow the "Create an image from an alternative base image" section of this guide.

That's it! After building your Docker image, you can deploy it to AWS Lambda following steps outlined in the repository README. Now your serverless function is ready to rock!

Example 2: AI inference

The second demo application allows users to upload an image and then invoke a serverless function to classify the main subject in the image.

It is in the same GitHub repo as the previous example, but in the tensorflow branch. The backend serverless function for image classification is in the api/functions/image-classification folder of the tensorflow branch. The src/main.rs file contains the Rust program's source code. The Rust program reads image data from STDIN and writes its result as text to STDOUT. It uses the WasmEdge TensorFlow API to run the AI inference.